Website AI Auditor

Last Updated: Jan 12, 2026

Is your website invisible to AI?

Traditional SEO tools check whether Googlebot can crawl your site. Texavor's Website Auditor checks whether AI agents (such as GPTBot, CCBot, and Anthropic-AI) can read, understand, and cite your content.

Why Audit for AI?

AI search engines work differently from traditional keyword-based search:

- Crawl: Your robots.txt rules decide whether they are allowed to read your site.

- Understand: They rely heavily on Schema (Structured Data) to know "Who wrote this?" and "Is this a trusted brand?".

- Process: They prefer high "Content Density" (clean HTML) over messy, script-heavy pages.
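This page does not describe how the Auditor calculates Content Density. As a rough illustration only, the hypothetical `content_density` function below measures the share of a page's raw HTML that is visible text, ignoring `<script>` and `<style>` contents. It is a minimal sketch built on Python's standard `html.parser`, not Texavor's formula, but it shows why a script-heavy page scores lower than a clean one.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def content_density(html: str) -> float:
    """Hypothetical metric: visible characters divided by total HTML characters."""
    if not html:
        return 0.0
    collector = TextCollector()
    collector.feed(html)
    collector.close()
    # Collapse runs of whitespace so indentation doesn't count as content.
    text = " ".join(" ".join(collector.chunks).split())
    return len(text) / len(html)
```

For example, `<p>Hello world</p>` wrapped in a bare `<html><body>` shell scores 0.25, while the same paragraph preceded by an inline `<script>` scores lower, because the script adds markup without adding readable text.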

Understanding Your Score

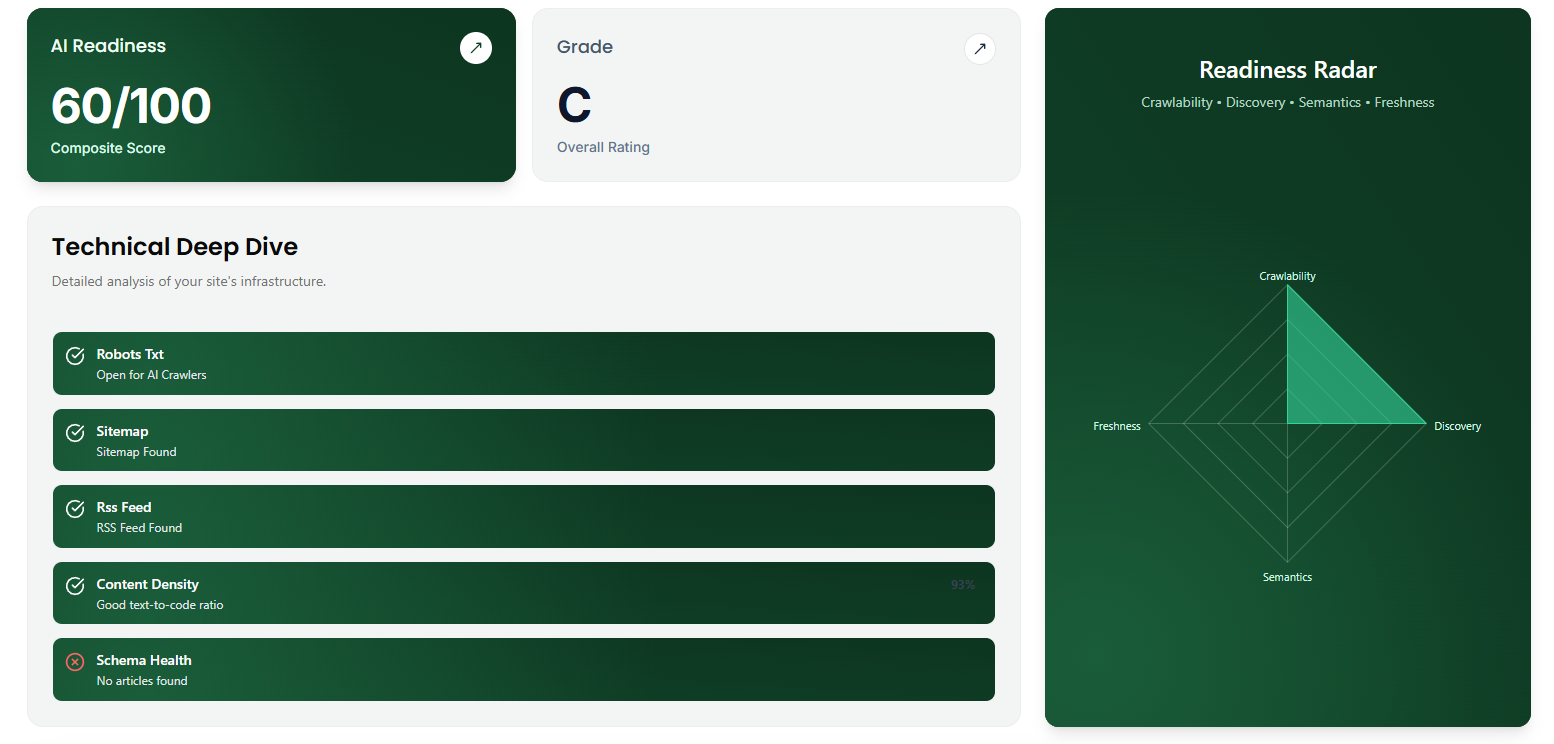

The Auditor gives you an AI Readiness Score (0-100) based on 4 pillars:

1. Crawler Access (Safety)

- What we check: Your `robots.txt` file.

- The Goal: Ensure you are NOT blocking `User-agent: GPTBot` or `CCBot`.

- Fix: If this fails, update your `robots.txt` to allow these agents.
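To check your own robots.txt before running an audit, you can use Python's standard `urllib.robotparser`. This is a minimal sketch, not Texavor's implementation: `AI_AGENTS` and `blocked_ai_agents` are hypothetical names, and the agent tokens are simply the ones named on this page (matching is case-insensitive).

```python
from urllib.robotparser import RobotFileParser

# Assumed list of AI crawler user-agent tokens, taken from the agents named above.
AI_AGENTS = ("GPTBot", "CCBot", "anthropic-ai")


def blocked_ai_agents(robots_txt: str, url: str) -> list[str]:
    """Returns the AI agents that the given robots.txt forbids from fetching url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return [agent for agent in AI_AGENTS if not parser.can_fetch(agent, url)]


robots = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nAllow: /\n"
print(blocked_ai_agents(robots, "https://example.com/blog/post"))
```

Here only GPTBot is reported as blocked; CCBot and anthropic-ai fall through to the `User-agent: *` group, which allows everything.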

2. Semantics (Schema)

- What we check: JSON-LD Schema markup on your homepage and recent articles.

- The Goal: We look for `Organization` schema (brand identity) and `Article`/`BlogPosting` schema.

- Why it matters: Schema tells AI systems, in machine-readable form, who you are. Without it, your pages are just unstructured text.
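One way to see which schema types a page exposes is to extract its `<script type="application/ld+json">` blocks and collect their `@type` values. This is a hypothetical sketch (`schema_report` is an illustrative name, not the Auditor's code). It counts a type wherever it appears, including inside `@graph` arrays and nested objects such as an article's `publisher`, which may be more lenient than the Auditor's own check.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collects the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def collect_types(node) -> set[str]:
    """Recursively gathers @type values from dicts and lists (including @graph)."""
    found: set[str] = set()
    if isinstance(node, list):
        for item in node:
            found |= collect_types(item)
    elif isinstance(node, dict):
        value = node.get("@type")
        if isinstance(value, str):
            found.add(value)
        elif isinstance(value, list):
            found.update(v for v in value if isinstance(v, str))
        for child in node.values():
            if isinstance(child, (dict, list)):
                found |= collect_types(child)
    return found


def schema_report(html: str) -> dict[str, bool]:
    """Reports whether Organization and Article/BlogPosting schema are present."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    types: set[str] = set()
    for block in collector.blocks:
        try:
            types |= collect_types(json.loads(block))
        except json.JSONDecodeError:
            continue  # malformed JSON-LD is skipped in this sketch
    return {
        "organization": "Organization" in types,
        "article": bool(types & {"Article", "BlogPosting"}),
    }
```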

3. Discovery

- What we check: Your `sitemap.xml` and RSS feeds.

- The Goal: Ensure agents can discover your new content quickly.
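To confirm that your sitemap and feed actually list your newest URLs, you can parse them with Python's standard `xml.etree.ElementTree`. This sketch assumes a standard sitemap in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace and an RSS 2.0 feed (`<rss><channel><item><link>`); `sitemap_urls` and `rss_links` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Returns every <loc> found inside the <url> entries of a sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def rss_links(rss_xml: str) -> list[str]:
    """Returns the <link> of each <item> in an RSS 2.0 feed."""
    root = ET.fromstring(rss_xml)
    return [
        link.text.strip()
        for link in root.findall("./channel/item/link")
        if link.text
    ]
```

If a post you just published is missing from both lists, agents relying on these files are unlikely to find it quickly.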

4. Freshness

- What we check: The `dateModified` values on your last 10 posts.

- The Goal: AI systems tend to favor content updated within the last 12 months.
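The freshness check can be approximated as the share of your latest posts whose `dateModified` falls inside the window. This is a minimal sketch under stated assumptions: dates are ISO 8601 strings ordered newest first, values without a UTC offset are treated as UTC, `now` is timezone-aware, and "12 months" is approximated as 365 days. `freshness_ratio` is a hypothetical name, not part of Texavor.

```python
from datetime import datetime, timedelta, timezone


def parse_iso(value: str) -> datetime:
    """Parses an ISO 8601 date; values without an offset are assumed to be UTC."""
    # Older Python versions' fromisoformat() rejects a trailing "Z", so normalize it.
    parsed = datetime.fromisoformat(value.strip().replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


def freshness_ratio(date_modified: list[str], now: datetime, window_days: int = 365) -> float:
    """Share of the 10 most recent posts modified within the window (newest first)."""
    recent = date_modified[:10]
    if not recent:
        return 0.0
    cutoff = now - timedelta(days=window_days)
    fresh = sum(1 for value in recent if parse_iso(value) >= cutoff)
    return fresh / len(recent)
```

With `now` set to Jan 12, 2026, a post last modified on 2025-12-01 counts as fresh, while one last modified on 2024-01-01 does not.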

Improving Your Score

- Grade A (90-100): Fully optimized. Likely to be cited.

- Grade B (70-89): Good, but missing some semantic signals.

- Grade F (<60): You are likely blocking AI crawlers or have no schema.
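The grade bands above can be expressed as a small lookup. This sketch covers only the bands listed on this page. Scores from 60 to 69 are not described here, so the hypothetical `grade` function returns `None` for them rather than guessing a letter.

```python
from typing import Optional


def grade(score: int) -> Optional[str]:
    """Maps a 0-100 AI Readiness Score to the grade bands listed on this page."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 90:
        return "A"
    if score >= 70:
        return "B"
    if score < 60:
        return "F"
    return None  # 60-69: no band is described on this page, so don't guess
```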

Support & Resources

Need help publishing your content strategy?

- 📧 Email Support: hello@texavor.com

- 📚 Documentation: Browse the full guide